Yep, another one of these is long overdue, right?

Since the very start of the Eleven alpha, our dedicated testers have been showing some serious commitment, and we have been able to identify and fix a large number of bugs thanks to their help. By now, most of the major game features have been ported from our prototype server to the new one. The notable exception is location instancing, which includes home streets.

But since without doubt the most commonly asked question is some variation of “When are you going to let more players in?”, I would like to give you an honest update in that regard from a technical point of view.

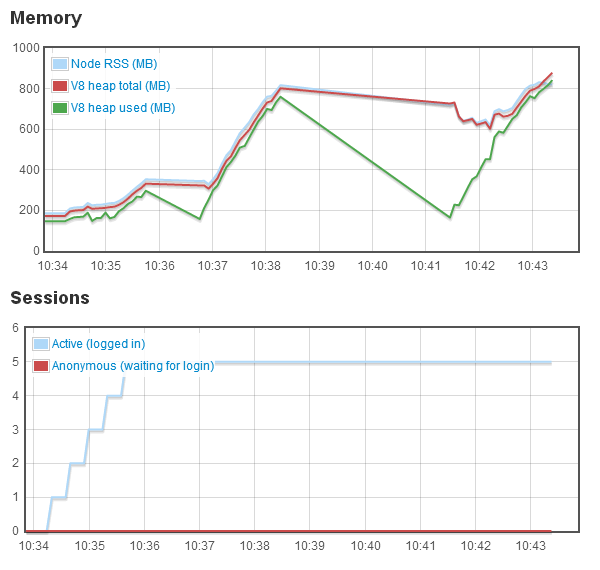

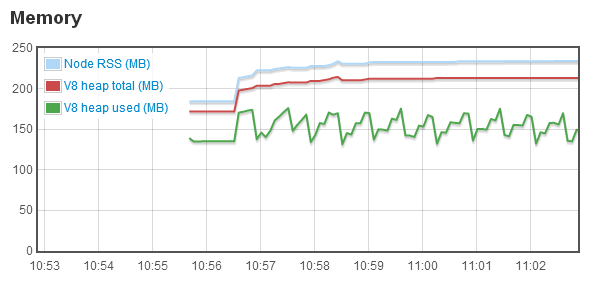

Over the past three months, we have very slowly ramped up the number of players, keeping a close eye on the system’s performance. While things are mostly working ok-ish (apart from crashes, numerous bugs and just generally being an alpha), it is becoming quite clear that as it stands, the server would not be able to cope with actual MMO-like player numbers.

After some analysis of the underlying issues, we now know that the problem is rooted in a core part of our architecture. Our general approach since day one has been “get the game running with as few changes as possible to the code released by TS”. To achieve this, we used a bleeding-edge JavaScript language feature called Proxies to replicate how the original game server handled references between game objects and communication between server instances, because they are perfectly suited for that purpose. In retrospect, though, we probably should have paid more attention to the fact that their current implementation in Node.js is a dead end, and that the topic is not a priority for V8.
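For readers unfamiliar with proxies, here is a minimal sketch of the general idea: an object reference that is only resolved when one of its properties is touched. It uses the standardized `Proxy` constructor, which is not necessarily the API variant our server runs on, and every name in it (`store`, `makeRef`, `isRef`, the object IDs) is made up for illustration rather than taken from the actual game code.

```javascript
// Hypothetical in-memory store standing in for persistence or a remote server instance.
const store = new Map([
  ['P1', { id: 'P1', name: 'Some Player', location: { ref: 'L1' } }],
  ['L1', { id: 'L1', label: 'Some Street' }],
]);

// Counts how often an object actually had to be fetched from the store.
let loads = 0;

// A stored value of the form { ref: '<id>' } is treated as a pointer to another object.
function isRef(value) {
  return typeof value === 'object' && value !== null && typeof value.ref === 'string';
}

// Returns a proxy that looks like the referenced object but loads it lazily.
function makeRef(id) {
  let cached = null;
  const resolve = () => {
    if (cached === null) {
      loads += 1;
      cached = store.get(id);
      if (cached === undefined) throw new Error(`unknown object: ${id}`);
    }
    return cached;
  };
  return new Proxy({}, {
    // Every property read goes through this trap, which is where the overhead comes from.
    get(_target, prop) {
      const value = resolve()[prop];
      return isRef(value) ? makeRef(value.ref) : value;
    },
    set(_target, prop, value) {
      resolve()[prop] = value;
      return true;
    },
    has(_target, prop) {
      return prop in resolve();
    },
  });
}

const player = makeRef('P1');          // nothing is loaded yet
const loadsBeforeUse = loads;
const label = player.location.label;   // loads P1, then L1, transparently
console.log(label, loads);
```

The calling code never needs to know whether `player.location` is already in memory or lives somewhere else, which is what makes the approach attractive. The price is that each property access is routed through a trap function.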

To illustrate, here’s how “fast” some typical operations are on our server right now, and in comparison, the same operations with the problematic parts taken out (just for the benchmark; the game would not work that way, obviously):

| Operation | Right now (ops/sec) | Problematic parts taken out (ops/sec) |
| --- | --- | --- |
| login_start | 4.12 | 6.34 |
| groups_chat | 2,126 | 11,023 |
| itemstack_verb_menu | 216 | 1,766 |
| itemstack_verb | 316 | 4,454 |
| move_xy | 4,787 | 126,096 |
| trant.onInterval | 1,618 | 49,433 |
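To put the two sets of figures above side by side, the following snippet divides one by the other. The numbers are copied from the benchmark results; the variable and property names (`benchmarks`, `current`, `strippedDown`) are just labels chosen for this example.

```javascript
// Benchmark figures from above, in operations per second.
const benchmarks = {
  login_start:         { current: 4.12, strippedDown: 6.34 },
  groups_chat:         { current: 2126, strippedDown: 11023 },
  itemstack_verb_menu: { current: 216,  strippedDown: 1766 },
  itemstack_verb:      { current: 316,  strippedDown: 4454 },
  move_xy:             { current: 4787, strippedDown: 126096 },
  'trant.onInterval':  { current: 1618, strippedDown: 49433 },
};

// Speedup factor = stripped-down throughput divided by current throughput.
function speedups(results) {
  const out = {};
  for (const [name, r] of Object.entries(results)) {
    out[name] = r.strippedDown / r.current;
  }
  return out;
}

const factors = speedups(benchmarks);
for (const [name, factor] of Object.entries(factors)) {
  console.log(`${name}: ${factor.toFixed(1)}x`);
}
```

The factors range from roughly 1.5x for `login_start` to roughly 30x for `trant.onInterval`, so the overhead hits frequent, lightweight operations the hardest.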

Unfortunately, there is no easy solution here. Reconsidering our early technology platform decisions would of course be a huge step backwards, but more intrusive modifications to the TS architecture and code, to get rid of the “slow” proxies, are not a pleasant prospect either (remember, that is roughly a million lines of code).

We are of course pondering ways to tackle the problem more creatively, too, but without that liberating Eureka moment so far.

Sorry if all this sounds a bit bleak now, but we would rather be upfront about where we’re at than raise expectations and then keep you in the dark about the challenges ahead. Rest assured that we are still working hard on Eleven (there are many other moving parts that are not related to this issue), and who knows, maybe there is a feasible solution around the corner that we just haven’t thought of yet.

(We should probably donate to Tii more…)